OpenAI recently announced that its GPT Store will launch next week, giving users a platform to share and sell custom AI agents built on its GPT-4 large language model. The company has also emailed people registered as GPT Builders, urging them to make sure their GPTs comply with its brand guidelines and encouraging them to make their GPTs public.

The GPT Store was first unveiled at OpenAI’s developer conference in November, where the company revealed its plan to let users build AI agents on the powerful GPT-4 model. The GPT builder, available only to ChatGPT Plus and enterprise subscribers, lets individuals create their own customized versions of ChatGPT-style chatbots. The store will let them share these GPTs and earn money from them: OpenAI plans to pay creators based on how much their AI agents are used on the platform, although it has not yet disclosed details of the payment structure. Now that the launch is official, builders are eager to showcase their GPTs and profit from them.

The announcement quickly made “AI Agent” a trending search term. AI experts and researchers describe 2023 as the breakout year for large language models and predict that 2024 will be the year AI Agents take off, arguing that agents are the most promising form for the first real-world applications of large language models.

In his article “AI is about to completely change how you use computers,” Bill Gates describes the potential impact of AI Agents. “In the next five years, this will change completely,” he writes. “You won’t have to use different apps for different tasks. You’ll simply tell your device, in everyday language, what you want to do. And depending on how much information you choose to share with it, the software will be able to respond personally because it will have a rich understanding of your life. In the near future, anyone who’s online will be able to have a personal assistant powered by artificial intelligence that’s far beyond today’s technology.”

AI Agent

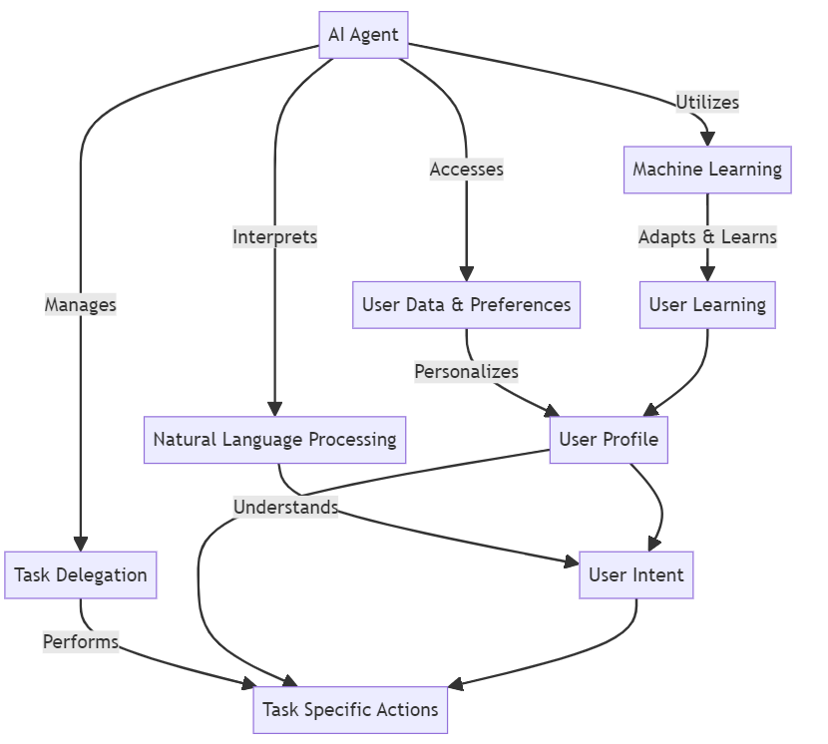

An AI Agent framework is a system built around the agent itself, which coordinates several key components to understand and carry out user commands.

First, the AI Agent uses its natural language processing (NLP) component to interpret requests made in everyday language. This lets it work out what the user intends and pass that intent to the task delegation component, which manages and executes specific tasks such as scheduling, searching for information, or other activities the user requests.
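The hand-off from language understanding to task delegation can be illustrated with a minimal Python sketch. It assumes a toy keyword matcher in place of a real NLP component (a production agent would more likely ask a language model to classify the intent), and the intent names, keywords, and handler functions are all hypothetical.

```python
from typing import Callable, Dict, List

# Hypothetical intents and trigger keywords; a stand-in for a real NLP model.
INTENT_KEYWORDS: Dict[str, List[str]] = {
    "schedule": ["meeting", "schedule", "calendar", "remind"],
    "search": ["find", "search", "look up", "what is"],
}


def detect_intent(utterance: str) -> str:
    """Toy NLP step: map an everyday-language request to an intent label."""
    text = utterance.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return intent
    return "unknown"


def schedule_task(utterance: str) -> str:
    return f"Added to calendar: {utterance}"


def search_task(utterance: str) -> str:
    return f"Searching for: {utterance}"


# Task delegation: each intent is routed to the handler that executes it.
HANDLERS: Dict[str, Callable[[str], str]] = {
    "schedule": schedule_task,
    "search": search_task,
}


def handle_request(utterance: str) -> str:
    """Understand the request, then delegate it to the matching task handler."""
    intent = detect_intent(utterance)
    handler = HANDLERS.get(intent)
    if handler is None:
        return "Sorry, I can't help with that yet."
    return handler(utterance)


print(handle_request("Schedule a meeting with Ana on Friday"))
print(handle_request("Find flights to Lisbon"))
```

Keeping the intent detector and the handler table separate means a better language model can replace `detect_intent` without touching the handlers.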

At the same time, the AI Agent uses machine learning to adapt to the user’s behavior patterns and preferences, which lets it keep improving its responses to the user’s needs over time.

The AI Agent also draws on the personal data and preference settings stored in the user’s profile. This information lets it personalize its services so that its solutions and recommendations match the user’s actual needs as closely as possible.
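Learning from behavior and personalizing from a profile can be sketched together in a deliberately simple form. Instead of a trained machine-learning model, the sketch below just counts which value a user picks for each kind of choice, and the profile then fills in details the user leaves out. The `UserProfile` class, its fields, and the frequency-counting rule are illustrative assumptions, not how any particular agent works.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field
from typing import DefaultDict, Dict, List, Optional


@dataclass
class UserProfile:
    """Hypothetical user profile: explicit settings plus preferences learned from behavior."""

    settings: Dict[str, str] = field(default_factory=dict)
    # history[slot][value] counts how often the user chose `value` for `slot`.
    history: DefaultDict[str, Counter] = field(
        default_factory=lambda: defaultdict(Counter)
    )

    def record_choice(self, slot: str, value: str) -> None:
        """Learning step: update the counts every time the user makes a choice."""
        self.history[slot][value] += 1

    def preferred(self, slot: str) -> Optional[str]:
        """Explicit settings win; otherwise use the most frequent past choice."""
        if slot in self.settings:
            return self.settings[slot]
        counts = self.history.get(slot)
        if not counts:
            return None
        return counts.most_common(1)[0][0]


def personalize(request: Dict[str, str], profile: UserProfile, slots: List[str]) -> Dict[str, str]:
    """Fill in any slot the user left out with the profile's preferred value."""
    filled = dict(request)
    for slot in slots:
        if slot not in filled:
            value = profile.preferred(slot)
            if value is not None:
                filled[slot] = value
    return filled


profile = UserProfile(settings={"language": "en"})
for cuisine in ["thai", "italian", "thai"]:
    profile.record_choice("cuisine", cuisine)

print(personalize({"task": "book_table"}, profile, ["cuisine", "language", "party_size"]))
# {'task': 'book_table', 'cuisine': 'thai', 'language': 'en'}
```

Values the user states explicitly are never overwritten, and slots with no setting or history (here `party_size`) are left for the agent to ask about.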

Throughout this process, the AI Agent continuously coordinates natural language understanding, task execution, learning from user behavior, and service personalization. This interplay lets it respond to user needs in a highly personalized and efficient way, offering a more intelligent and intuitive user experience.

The simplified workflow diagram of the AI Agent can be summarized as follows.

The AI Agent is the central node, connected to components that each serve a specific purpose:

○ Natural Language Processing: Interprets user inputs in natural language.

○ Task Delegation: Manages and executes specific tasks.

○ Machine Learning: Adapts and learns from user behavior and preferences.

○ User Data & Preferences: Accesses and utilizes personal data and preference settings of users.

These components are, in turn, linked to further functions:

○ User Intent: Understood and interpreted by Natural Language Processing.

○ Task-Specific Actions: Executed by Task Delegation.

○ User Learning: Machine Learning adapts and learns from user behavior.

○ User Profile: Personalized by user data and preferences, influencing the understanding of user intent and task execution.
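The links in the diagram, including the profile’s influence on both intent understanding and task execution, can be tied together in one small Python sketch. Everything here is an illustrative assumption: the `Agent` and `Profile` classes, the keyword-based “NLP,” and the rule that an ambiguous follow-up falls back to the user’s most frequent past intent stand in for the language models and learned components a real agent would use.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Profile:
    """User Profile: stored preferences plus a tally of past intents (User Learning)."""
    preferences: Dict[str, str] = field(default_factory=dict)
    intent_counts: Counter = field(default_factory=Counter)


class Agent:
    """Central node wiring the diagram's components together (illustrative only)."""

    def __init__(self, profile: Profile) -> None:
        self.profile = profile
        # Task Delegation: intent label -> action that executes it.
        self.actions: Dict[str, Callable[[str], str]] = {
            "weather": self.weather,
            "news": self.news,
        }

    def understand(self, text: str) -> str:
        """Toy NLP: keyword match, else fall back to the user's most frequent past intent."""
        lowered = text.lower()
        for intent in self.actions:
            if intent in lowered:
                return intent
        if self.profile.intent_counts:
            return self.profile.intent_counts.most_common(1)[0][0]
        return "unknown"

    def weather(self, text: str) -> str:
        city = self.profile.preferences.get("city", "your area")
        return f"Weather forecast for {city}"

    def news(self, text: str) -> str:
        topic = self.profile.preferences.get("news_topic", "top stories")
        return f"Headlines: {topic}"

    def handle(self, text: str) -> str:
        intent = self.understand(text)            # User Intent
        action = self.actions.get(intent)
        if action is None:
            return "Could you rephrase that?"
        self.profile.intent_counts[intent] += 1   # User Learning
        return action(text)                       # Task-Specific Actions


agent = Agent(Profile(preferences={"city": "Berlin"}))
print(agent.handle("What's the weather tomorrow?"))  # Weather forecast for Berlin
print(agent.handle("And for the weekend?"))          # Weather forecast for Berlin
```

The second request never mentions the weather; the profile’s learned history supplies the intent and its stored city supplies the task parameter.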

AI Agent: The Opportunity for Implementing Large Language Models

With rapid advances in machine learning and deep learning, large language models such as GPT-4 and BERT have reached unprecedented complexity and accuracy. They can handle complex natural language processing tasks and enable more precise, personalized services.

AI Agents are a direct application of these technologies: they harness large language models to give users a smarter interactive experience. As technology progresses, users expect to interact with it in a more natural and intuitive way. Through natural language processing and machine learning, AI Agents can communicate with users in a way that closely resembles human conversation, which is far more engaging than a traditional graphical or command-line interface.

In the digital age, individuals and businesses alike must process vast amounts of data. AI Agents can use large language models to analyze and process this data efficiently and to offer personalized services and recommendations, which helps improve productivity, optimize user experiences, and target marketing precisely. Demand for intelligent services is growing rapidly, from homes to businesses and from personal assistants to customer service. Because AI Agents can support many domains, including health consultation, educational tutoring, shopping assistance, and enterprise productivity tools, they are an ideal platform for delivering intelligent services.

AI Agent as a Bridge to Large Language Models

Bridging AI Agents and large language models in an enterprise requires standardizing how corporate data is collected, a process that typically calls for specialized data companies to deploy and manage it.

Enterprises often possess vast amounts of data from various sources, including customer interaction data, transaction records, market analysis, and more. Professional data companies can assist enterprises in collecting, integrating, and cleaning this data, ensuring its quality and consistency to provide a reliable data source for AI Agents.
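As a rough illustration of the collection, integration, and cleaning step, the Python sketch below merges two hypothetical exports (CRM contacts and transaction records) keyed on a customer ID. The field names, the keep-first deduplication rule, and the placeholder record for customers missing from the CRM are all assumptions chosen for the example.

```python
from typing import Dict, List

# Hypothetical raw exports from two systems with inconsistent formatting.
crm_records = [
    {"customer_id": "C001", "email": " Ana@Example.com ", "last_contact": "2023-12-01"},
    {"customer_id": "C002", "email": "li@example.com", "last_contact": "2023-11-20"},
    {"customer_id": "C001", "email": "ana@example.com", "last_contact": "2023-12-01"},  # duplicate
]
transactions = [
    {"customer_id": "C001", "amount": 120.0},
    {"customer_id": "C001", "amount": 35.5},
    {"customer_id": "C003", "amount": 80.0},  # no CRM record
]


def integrate(crm: List[dict], txns: List[dict]) -> Dict[str, dict]:
    """Clean CRM rows, drop duplicates, and attach each customer's transaction total."""
    customers: Dict[str, dict] = {}
    for row in crm:
        cid = row["customer_id"].strip()
        if cid in customers:  # keep the first occurrence of each customer
            continue
        customers[cid] = {
            "email": row["email"].strip().lower(),
            "last_contact": row["last_contact"],
            "total_spent": 0.0,
        }
    for txn in txns:
        cid = txn["customer_id"].strip()
        # Customers seen only in the transaction system get a placeholder record.
        record = customers.setdefault(
            cid, {"email": None, "last_contact": None, "total_spent": 0.0}
        )
        record["total_spent"] += txn["amount"]
    return customers


merged = integrate(crm_records, transactions)
print(merged["C001"])
# {'email': 'ana@example.com', 'last_contact': '2023-12-01', 'total_spent': 155.5}
```

Keeping placeholder records instead of dropping unmatched transactions makes the gaps visible, so they can be fixed at the source rather than silently lost.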

For AI Agents to process and analyze this data effectively, it must be converted into consistent formats. This means unifying data structures, ensuring data integrity and accuracy, and handling missing or anomalous values.
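The standardization step can be sketched in Python as a function that maps each raw row onto a shared schema, normalizes dates, and records quality issues instead of silently dropping rows. The field aliases, the accepted date formats (`%d/%m/%Y` assumes day-first dates), and the 10,000 upper bound on amounts are hypothetical choices; real rules would come from the enterprise’s own data dictionary.

```python
from datetime import datetime
from typing import Dict, List, Optional

# Hypothetical mapping from each source system's column names to one shared schema.
FIELD_ALIASES = {
    "cust_id": "customer_id", "CustomerID": "customer_id",
    "amt": "amount", "Amount": "amount",
    "date": "order_date", "OrderDate": "order_date",
}
# Accepted input date formats; "%d/%m/%Y" assumes day-first dates.
DATE_FORMATS = ["%Y-%m-%d", "%d/%m/%Y"]


def parse_amount(value: Optional[str]) -> Optional[float]:
    """Convert to float, treating empty or non-numeric values as missing."""
    try:
        return float(value)
    except (TypeError, ValueError):
        return None


def parse_date(value: str) -> Optional[str]:
    """Normalize the accepted formats to ISO 8601 (YYYY-MM-DD); None if nothing matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def standardize(row: Dict[str, str], max_amount: float = 10_000.0) -> Dict[str, object]:
    """Map a raw row onto the shared schema and list any quality issues found."""
    unified = {FIELD_ALIASES.get(key, key): value for key, value in row.items()}
    issues: List[str] = []

    amount = parse_amount(unified.get("amount"))
    if amount is None:
        issues.append("missing or invalid amount")
    elif amount < 0 or amount > max_amount:
        issues.append("amount out of range")

    order_date = parse_date(unified.get("order_date") or "")
    if order_date is None:
        issues.append("missing or unparseable date")

    return {
        "customer_id": unified.get("customer_id"),
        "amount": amount,
        "order_date": order_date,
        "issues": issues,
    }


print(standardize({"CustomerID": "C001", "Amount": "49.90", "OrderDate": "03/01/2024"}))
print(standardize({"cust_id": "C002", "amt": "", "date": "2024-01-05"}))
```

Flagging rows rather than discarding them lets a data team decide later whether to impute, correct, or exclude each anomaly.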

Once the data has been collected and standardized, large language models can analyze it in depth to extract valuable insights and knowledge, helping enterprises better understand customer behavior, optimize business processes, and predict market trends.

Finally, the processed data is integrated into the AI Agent. This may involve customizing AI models for specific business needs or connecting the data to the AI Agent for real-time data flow and analysis. Because enterprise data changes constantly, data management and model optimization must be ongoing, and specialized data companies can provide continuous support so that data and AI models keep improving over time.
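One common way to connect enterprise data to an agent, assumed here rather than taken from the text, is retrieval: at query time the agent looks up the most relevant standardized records and passes them to the language model as context. The Python sketch below uses naive word overlap in place of the embedding-based search a production system would more likely use; the record IDs and texts are made up, and the actual model call is left out.

```python
import re
from typing import Dict, List

# Hypothetical knowledge records produced by the standardization pipeline.
RECORDS: List[Dict[str, str]] = [
    {"id": "policy-12", "text": "Refunds are issued within 14 days of a returned order."},
    {"id": "faq-03", "text": "Premium members get free shipping on all orders."},
    {"id": "faq-07", "text": "Orders can be tracked from the account dashboard."},
]


def tokenize(text: str) -> set:
    """Lowercase word set; a stand-in for a real embedding model."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, records: List[Dict[str, str]], k: int = 2) -> List[Dict[str, str]]:
    """Rank records by how many words they share with the question; keep the top k."""
    q_words = tokenize(question)
    scored = [(len(q_words & tokenize(r["text"])), r) for r in records]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [record for _, record in scored[:k]]


def build_prompt(question: str, records: List[Dict[str, str]]) -> str:
    """Assemble the context an agent would pass to its language model (call not shown)."""
    context = "\n".join(f"[{r['id']}] {r['text']}" for r in retrieve(question, records))
    return f"Answer using only this company data:\n{context}\n\nQuestion: {question}"


print(build_prompt("How many days until my refund is issued?", RECORDS))
```

Because retrieval happens on every request, updating `RECORDS` changes the agent’s answers immediately, without retraining a model.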

Data standardization and collection call for a professional data team. DataOceanAI has nearly 20 years of experience in the AI training data field and works closely with 880 large technology enterprises and research institutions worldwide. When enterprises enter new fields, launch new business lines, or expand into overseas markets, it designs professional data solutions that help them quickly build data matched to their algorithm models and explore new business areas.

DataOceanAI is redefining the way businesses interact with language. We offer a suite of cutting-edge large language models (LLMs) trained on massive datasets of text and code, allowing them to understand and respond to human language in nuanced and meaningful ways.
