Nowadays, AI-assisted interactive education has become a key driver in enhancing learning efficiency and teaching quality. Language learning is not only a means of acquiring knowledge but also meets the growing communication needs of people. Moreover, it serves as a bridge to connect the world and understand different cultures.

At the application level, people can learn languages through numerous apps. Users can study the pronunciation, characters, and even pinyin of the target language. Additionally, the speech recognition feature can identify the user’s pronunciation and determine its accuracy. Users can tailor their learning tasks based on their weaknesses, greatly enhancing both the enjoyment and efficiency of learning.

At the technical level, current text interaction models such as ChatGPT, and speech interaction models like Whisper, wavLM, and wav2vec 2.0 have demonstrated excellent recognition capabilities for mainstream languages such as English and Chinese. I tested their recognition capabilities with various dialects.

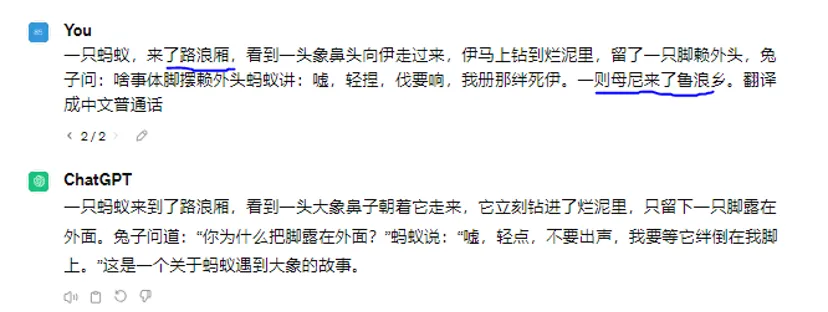

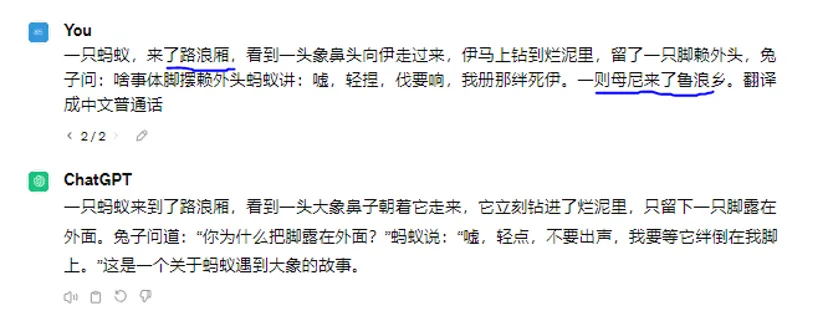

Round 1 Shanghai dialect Challenge

First, I asked ChatGPT to translate a Shanghainese phrase. ChatGPT translated “路浪厢” as a place name, but in Shanghainese, it actually means “on the road.”

Recognition result: Recognition failed.

Round 2

Wuhan dialect Challenge

Following that, I invoked the wavLM model to recognize Wuhanese. The above is the authentic transcript, and below is the recognition result, showing that wavLM isn’t particularly proficient in recognizing Wuhanese.

Recognition result: Recognition failed.

While these models excel in recognizing and processing standard speech and mainstream languages, their performance still needs improvement when dealing with dialects. Due to the limited inclusion of dialectal data in training datasets, the models’ ability to understand and process non-standard speech remains constrained.

How to leverage high-quality parallel corpora to enhance the recognition capability of large models:

A high-quality parallel corpus that enhances the recognition capability of large models should include abundant dialectal speech data paired with accurate translations into standard languages. Such a corpus can address the following challenges:

1. Increase Data Diversity and Coverage**: Expand the training set with dialects and regional language data to improve the model’s understanding and generalization of non-mainstream languages.

2. Enhance Recognition Accuracy: Provide precise correspondences between dialectal speech and standard language texts, allowing the model to learn specific expressions and usages for more accurate recognition and translation of dialects.

3. Optimize Model Training: Use dialectal corpora for supervised learning and fine-tuning to further optimize model performance.

4. Enhance Model’s Cultural Sensitivity**: Include dialectal data with local characteristics and cultural elements to improve the model’s cultural sensitivity and adaptability.

5. Utilize Adapter Technology: Adapt existing pre-trained models to specific dialects and domains using adapter techniques, enhancing the model’s capability to understand and apply dialects without requiring full retraining of the entire model.

How to obtain high-quality speech parallel corpora:

Dataocean AI’s high-quality speech parallel corpora include speech and text data for multiple languages and their corresponding translations, covering various languages and dialects to meet global language learning needs, providing convenience for learners from diverse cultural backgrounds.

– High Accuracy: Each speech record includes precise textual translations, ensuring learners receive correct feedback.

– Diversity and Comprehensive Coverage: Encompassing various scenarios and languages, meeting diverse learning needs, and widely applicable in various learning environments.

Shanghai dialect