At yesterday’s OpenAI DevDay, a host of new features was announced. The updated GPT-4 Turbo brings six significant enhancements: a longer context length, more control over model outputs, more up-to-date knowledge, new multimodal capabilities, customization through fine-tuning, and higher rate limits. The event also introduced custom versions of ChatGPT, called GPTs, which let users tailor ChatGPT to their specific needs without any programming background. These custom GPTs will be offered through the upcoming GPT Store, so anyone can build their own ChatGPT without writing code.

ChatGPT can now do much more than chat: it supports image generation, voice interaction, text analysis, academic paper interpretation, web browsing, advanced data analysis, and more. Let’s look at how these multimodal features can help us learn and work more effectively.

01 Image Generation

ChatGPT-4 is a language model used primarily to understand and generate text. It can describe images in detail, interpret descriptions of images, and write detailed prompts for image generation, but it does not create images itself.

For image generation, OpenAI has developed dedicated models such as DALL-E, which creates images from textual descriptions. So while ChatGPT-4 can write text prompts for images, a separate system like DALL-E actually generates the images from those prompts.

Integrating ChatGPT-4 with DALL-E or a similar image generation model makes for a seamless experience: the user describes an image in a conversation with ChatGPT-4, and the system passes that description to the image generation model to produce the picture.

The first two images in this article were generated by GPT-4 with DALL-E 3; to use it, you first need to switch to DALL-E 3 mode. For example, below is what the editor got when asking it to show what Beijing might look like in ten years, and the result is truly fascinating:

Creating an image works much like asking for text information: it happens through dialogue, in the following steps:

Prompt creation: The user gives ChatGPT-4 a descriptive text prompt detailing the desired image content.

Prompt interpretation: ChatGPT-4 interprets the prompt and may refine it so that it is clear and detailed enough for image generation.

Image generation: The refined prompt is passed to an image generation model such as DALL-E, which creates the image from the description.

Presentation: The generated image is shown to the user, either as a standalone result or within the ChatGPT conversation.
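The same flow can be sketched in Python. This is a minimal sketch, not how ChatGPT works internally: `refine_prompt` is a hypothetical stand-in for the model's rewriting step, and the request body follows the shape of OpenAI's public Images API (`model`, `prompt`, `n`, `size`). The code only builds the HTTP request; actually sending it would require a real API key in place of the placeholder.

```python
import json
import urllib.request

# Endpoint of OpenAI's Images API for image generation.
IMAGES_URL = "https://api.openai.com/v1/images/generations"


def refine_prompt(user_text: str) -> str:
    """Hypothetical stand-in for the interpretation step: ChatGPT-4 turning
    a short description into a more detailed prompt. Here we just normalize
    whitespace and append illustrative style hints."""
    text = " ".join(user_text.split())
    return f"{text}. Highly detailed, wide-angle view, natural lighting."


def build_image_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Generation step: package the refined prompt as an Images API request."""
    body = {
        "model": "dall-e-3",  # DALL-E 3 accepts only n=1 per request
        "prompt": prompt,
        "n": 1,
        "size": "1024x1024",
    }
    return urllib.request.Request(
        IMAGES_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # First step: the user's own description.
    user_text = "What   Beijing might look like in ten years"
    prompt = refine_prompt(user_text)
    req = build_image_request(prompt, api_key="YOUR_API_KEY")  # placeholder key
    print(req.get_method(), req.full_url)
    print(json.loads(req.data)["prompt"])
    # Sending `req` with urllib.request.urlopen(req) would return JSON whose
    # "data" list holds the generated image URL(s) to present to the user.
```

Keeping request construction separate from sending makes the payload easy to inspect before any network call is made.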

02 Web Browsing

Web browsing is a key enhancement to ChatGPT-4. Before this update, ChatGPT-4 worked in a closed environment, relying only on training data up to its knowledge cutoff of April 2023. Users could ask questions and get answers based on the information available at that time, but the model could not access or retrieve up-to-date information from the internet.

With web browsing, available to ChatGPT Plus users as a beta feature, the model can now search the web in real time. It can pull in current information, such as the latest news articles, forum threads, or recent statistics, and so give more accurate and up-to-date answers than was possible before.

This internet connectivity significantly expands what ChatGPT can do: Plus users can have it navigate web pages, gather information, and interact with online content in real time. It could change the way people use AI, making it a more powerful tool for research, learning, and entertainment.
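Once a page has been retrieved, part of "reading" it is turning its HTML into plain text that a language model can work with. The sketch below shows only that extraction step, using Python's standard-library `html.parser`; it is an illustrative assumption, not OpenAI's actual browsing pipeline, and it works on an HTML string so it runs without network access.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects readable text from HTML, skipping <script> and <style> content."""

    SKIP_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a skipped tag
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def page_to_text(html: str) -> str:
    """Return the visible text of an HTML document as one space-joined string."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


if __name__ == "__main__":
    html = (
        "<html><head><title>News</title><style>p{color:red}</style></head>"
        "<body><h1>Latest update</h1><script>track()</script>"
        "<p>Results were published today.</p></body></html>"
    )
    print(page_to_text(html))
```

In a real browsing setup the HTML would come from an HTTP fetch, and the extracted text would then be summarized or quoted in the model's answer.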

03 Advanced Data Analysis

The advanced data analysis capabilities of ChatGPT-4 cover understanding, processing, analyzing, and visualizing data, and can help users solve a wide range of data analysis problems. Specifically, they include:

1. Data Understanding and Processing

○ Interpreting the structure and content of datasets.

○ Cleaning data, including dealing with missing values, outliers, and duplicates.

○ Performing data transformations, such as date format changes, data type conversions, encoding, and decoding.

2. Statistical Analysis

○ Descriptive statistics, summarizing data with measures such as the mean, median, and standard deviation.

○ Inferential statistics, such as hypothesis testing and confidence interval estimation.

○ Correlation analysis to assess the strength and direction of relationships between different variables.

3. Machine Learning

○ Making predictions and classifications using various machine learning algorithms.

○ Conducting feature selection and model optimization.

○ Interpreting the results and performance of models.

4. Data Visualization

○ Creating charts and visualizations such as line graphs, bar charts, and scatter plots.

○ Generating complex graphics with advanced visualization tools like Seaborn or Plotly.

○ Using visualizations to help understand and present the results of data analysis.

5. Natural Language Processing (NLP)

○ Preprocessing text data, e.g. tokenization, stemming, and stop-word removal.

○ Text classification, sentiment analysis, topic modeling, and more.

○ Entity recognition, relation extraction, etc.

6. Time Series Analysis

○ Analyzing time-series data, including identifying trends, seasonality, and cyclicality.

○ Forecasting future values, for instance with ARIMA or seasonal decomposition.

○ Detecting anomalies in time-series data.

7. Interactive Analysis

○ Interacting with users to understand specific data analysis needs.

○ Dynamically adjusting analysis strategies based on user questions.

○ Providing step-by-step explanations and interpretations of results to help users understand complex analyses.

These capabilities make ChatGPT-4 not just a chatbot, but also a powerful data analysis tool. Users can communicate with it using natural language, which allows for complex data analyses without the need for specialized data analysis or programming skills.
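To make a few of the capabilities listed above concrete (data cleaning, descriptive statistics, correlation analysis, and anomaly detection in a time series), here is a minimal sketch using only Python's standard library. The records and series are made-up toy values, and the z-score threshold of 2 is an illustrative assumption, not a rule ChatGPT applies; in practice the analysis code it writes usually relies on libraries such as pandas.

```python
import statistics
from math import sqrt


def clean(records):
    """Data cleaning: drop records with missing fields and exact duplicates, keeping order."""
    seen, out = set(), []
    for rec in records:
        if any(v is None for v in rec) or rec in seen:
            continue
        seen.add(rec)
        out.append(rec)
    return out


def describe(xs):
    """Descriptive statistics for one numeric column."""
    return {
        "mean": statistics.mean(xs),
        "median": statistics.median(xs),
        "stdev": statistics.stdev(xs),  # sample standard deviation
    }


def pearson(xs, ys):
    """Pearson correlation: strength and direction of a linear relationship (-1 to 1)."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def zscore_anomalies(xs, threshold=2.0):
    """Indices of values more than `threshold` sample standard deviations from the mean."""
    m, s = statistics.mean(xs), statistics.stdev(xs)
    return [i for i, x in enumerate(xs) if abs(x - m) / s > threshold]


if __name__ == "__main__":
    # Toy (ad spend, sales) records with one duplicate and one missing value.
    raw = [(1, 2.1), (2, 3.9), (2, 3.9), (3, None), (3, 6.2), (4, 8.1), (5, 9.8)]
    recs = clean(raw)
    xs = [r[0] for r in recs]
    ys = [r[1] for r in recs]
    print(describe(ys))
    print(round(pearson(xs, ys), 3))
    # Toy daily series with one obvious spike.
    print(zscore_anomalies([10, 11, 9, 10, 12, 10, 40, 11, 10, 9]))
```

`statistics.correlation` (Python 3.10+) would give the same Pearson coefficient; the explicit formula is kept here so each step of the calculation is visible.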

Below is the amplitude distribution chart of the representation data extracted from the pre-trained model, analyzed using this feature:

04 PDF Reader

ChatGPT-4 has no built-in PDF upload feature, so the content of a PDF file has to be analyzed through a web-reading plugin. WebPilot is a powerful ChatGPT plugin for this: users provide one or more URLs and can optionally add instructions, such as extracting specific information or specifying how the page content should be processed. This gives users a customized browsing experience in which web content is navigated and handled according to their own needs.

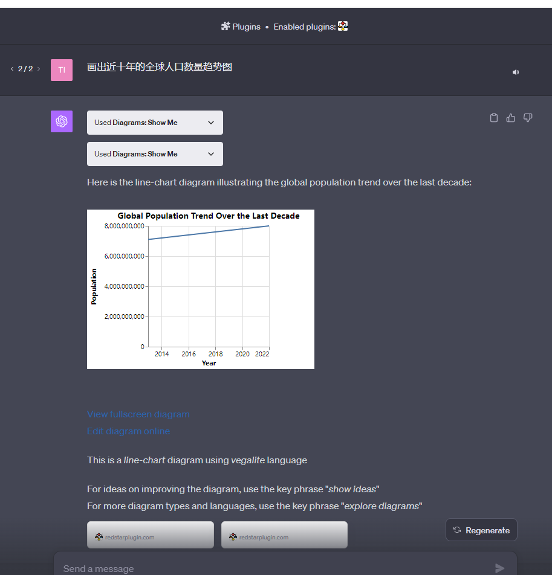

05 Diagrams

GPT-4 has no built-in drawing tool; drawing diagrams requires adding the ‘Diagrams: Show Me’ plugin.

Below is the trend in global population over the past decade:

As large language models (LLMs) like ChatGPT continue to open up new possibilities and push the envelope, more and more companies will be affected. The competitive wave of AI brings both challenges and opportunities.

For example, the voice interaction feature in ChatGPT relies on Whisper, a general-purpose, robust speech recognition model. Used directly in applications such as wake-word detection, smart homes, and in-vehicle systems, it may suffer from domain mismatch. The original Whisper model therefore needs to be adapted, transferring its strong recognition capabilities to specific datasets and application scenarios, and this requires a large amount of domain-specific data. Data is the cornerstone of AI research and development.
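Domain mismatch in speech recognition is commonly measured with word error rate (WER): the word-level edit distance between a reference transcript and the model's output, divided by the number of reference words, i.e. WER = (substitutions + deletions + insertions) / reference words. Comparing WER on in-domain test data before and after fine-tuning is one way to check whether adaptation helped. The sketch below implements that standard definition in plain Python; the lowercasing is a simplifying normalization assumption, and the example transcripts are made up, not actual Whisper outputs.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words,
    computed as a word-level Levenshtein distance with dynamic programming."""
    # Simple normalization (assumption): lowercase and split on whitespace.
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] = edit distance between the previous reference prefix and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of an extra word
                prev[j - 1] + cost,  # substitution (or match when cost is 0)
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


if __name__ == "__main__":
    ref = "turn on the kitchen lights"
    print(word_error_rate(ref, "turn on the chicken lights"))  # one substitution
    print(word_error_rate(ref, "turn on kitchen lights"))      # one deletion
```

Each of the two example hypotheses has one error against a five-word reference, so both score 0.2.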

DataOceanAI provides a vast array of vertical datasets, including multilingual, multi-scenario, and multi-application speech data, which can be used for the transfer and fine-tuning of Whisper. In addition, we offer an extensive collection of images and multimodal data, which can be utilized for the fine-tuning and adaptation of numerous other models.