With the development of AI-generated content (AIGC), AI virtual anchors have taken center stage on platforms such as Amazon, TikTok, YouTube, and Instagram. Compared with human anchors, AI virtual personalities in live broadcasting are professional, emotionally stable, and able to work tirelessly 24/7.

They offer a high-quality, cost-effective, and highly interactive form of live streaming that efficiently meets the demand for a better user experience. As a result, they have emerged as dark horses in the world of livestreaming e-commerce.

Virtual personalities are digital avatars that exist on display devices and have human-like appearances, behaviors, and thought processes. Besides those that resemble real humans, many anchors have a 2D animated style, which appeals to the aesthetic preferences of a large number of young people. Virtual anchors have taken over nearly all short-video and e-commerce platforms.

The key to the popularity of virtual anchors lies in their “glamorous appearance and captivating personalities.” This is also an essential requirement for creating virtual anchors.

Appealing Appearance

Currently, the creation of virtual characters can be mainly divided into two categories: 2D virtual characters and 3D virtual characters.

2D virtual characters are modeled based on real images and closely resemble real people. Their appearance, such as clothing and hairstyles, cannot be freely adjusted, and their image is relatively fixed. 2D virtual characters are limited to 2D presentation and cannot be used in 3D environments, VR, AR, or games.

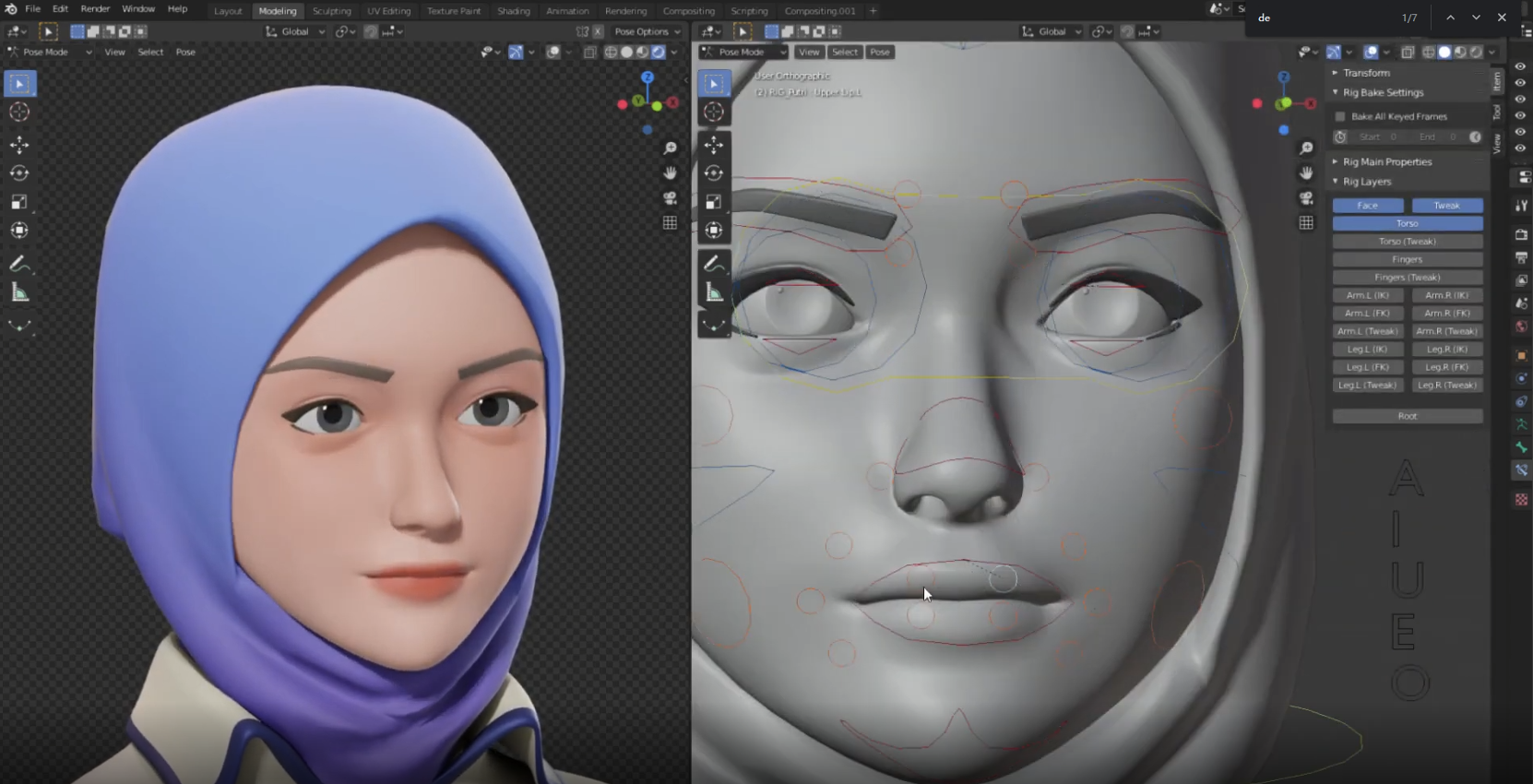

On the other hand, 3D virtual characters come in a variety of styles and can have flexible stylized appearances. They have a wide range of applications, primarily in content creation, IP development, and creative production. 3D virtual characters are characterized by versatility, adaptability to various forms, and the ability to be freely adjusted. They can be seamlessly integrated into real-world scenes to enhance realism further.

From a technical perspective, 2D virtual characters are often created using static scanning techniques. Full-scale real-life photos are captured using photography equipment, and then matrix scanning is performed in software to generate 2D images with a three-dimensional appearance. Static scanning technology is cost-effective and requires only a small amount of data and photography to create 2D virtual character images.

As a result, the majority of virtual digital characters currently in existence are 2D virtual characters, such as the virtual digital personas that live-stream 24 hours a day. In contrast, modeling 3D virtual characters places higher demands on software and technology.

It involves dynamic scanning techniques, including facial feature recognition, spatial transformation, model reconstruction, and skeletal deformation, to synthesize multi-modal 3D models, encompassing realistic and cartoon-like types.

As virtual character technology advances, costs are gradually decreasing, especially for 2D video-style virtual characters. For 3D virtual characters, however, technical limitations and high costs remain challenges for many enterprises. This has given rise to an emerging profession: the virtual character designer.

Reference from: https://www.cnshuziren.com/html/249.html

The process known as “face sculpting” turns a static face model into a precisely adjustable, dynamic one.

Within 3D modeling software, a static 3D facial model is clearly displayed. To introduce motion, various dimensions of each facial feature are made editable. According to virtual character designers, clicking on different regions such as the forehead, eyebrows, eyes, ears, nose, and mouth breaks every facial feature area down into specific operational dimensions.

The design of the eyes is particularly important, as they provide virtual beings with a human-like appearance. Shifting the interface to the eye region reveals nearly 30 finely detailed dimensions, enabling adjustments that match diverse facial characteristics.
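
One way to picture these editable dimensions is as named sliders grouped by facial region. The following is a minimal sketch only, not the data model of any particular 3D tool: the class names, the dimension names, and the -1.0 to 1.0 range are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Dimension:
    """One editable slider on a facial feature (hypothetical name and range)."""
    name: str
    value: float = 0.0
    low: float = -1.0   # assumed lower bound
    high: float = 1.0   # assumed upper bound

    def set(self, new_value: float) -> None:
        # Clamp so an edit can never push the feature outside its allowed range.
        self.value = max(self.low, min(self.high, new_value))


@dataclass
class FaceModel:
    """Maps each facial region to its named, editable dimensions."""
    regions: dict[str, dict[str, Dimension]] = field(default_factory=dict)

    def add_dimension(self, region: str, name: str) -> None:
        self.regions.setdefault(region, {})[name] = Dimension(name)

    def adjust(self, region: str, name: str, value: float) -> float:
        dim = self.regions[region][name]
        dim.set(value)
        return dim.value


face = FaceModel()
for dim_name in ("width", "height", "tilt", "spacing"):  # illustrative subset
    face.add_dimension("eyes", dim_name)
face.add_dimension("nose", "bridge_height")
```

A real tool would expose far more dimensions for the eye region than the four shown here; the point is only that each region carries its own independently adjustable values.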

After the virtual character designer’s work is complete, the facial model proceeds to stages such as makeup application and clothing coordination.

Virtual makeup artists and costume designers employ specialized software to enhance the virtual character’s appearance through makeup, accessories, clothing, etc., gradually creating distinct virtual personas.

Intriguing Personality

So, how can intriguing personalities be infused into virtual beings? Or rather, what is the ultimate form of virtual beings? Essentially, virtual beings remain a form of “human imitation” technology.

To provide virtual beings with an experience akin to that of humans, there are two main methods: bringing people into virtual spaces or introducing virtual beings into real spaces. The former corresponds to the metaverse market, while the latter involves technologies such as robots or holographic projections. This constitutes the ultimate form of virtual being products.

Currently, simpler virtual beings are driven by pre-written scripts, while intelligent virtual beings are powered by AI. The language models and speech recognition and synthesis models within virtual beings are AI-driven.
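
The difference between the two approaches can be sketched in a few lines. This is a hypothetical illustration, not how any specific product works: the `SCRIPT` table, the function names, and the `echo_model` stand-in are invented here, and a real intelligent anchor would call an actual language model where the stand-in is used.

```python
from typing import Callable, Optional

# Pre-written script: keyword -> canned reply (illustrative entries only).
SCRIPT = {
    "price": "This item is on sale during the stream.",
    "shipping": "Orders ship within the time shown on the product page.",
}


def scripted_reply(message: str) -> Optional[str]:
    """Script-driven behavior: answer only if a known keyword appears."""
    text = message.lower()
    for keyword, reply in SCRIPT.items():
        if keyword in text:
            return reply
    return None


def anchor_reply(message: str, model: Callable[[str], str]) -> str:
    """Use the script when it covers the message, otherwise ask the model."""
    scripted = scripted_reply(message)
    if scripted is not None:
        return scripted
    return model(message)


def echo_model(message: str) -> str:
    # Stand-in for an AI model; it only echoes the viewer's message back.
    return f"Let me check that for you: {message}"
```

A purely script-driven anchor stops at `scripted_reply` and has nothing to say when no keyword matches, which is the gap the AI-driven path is meant to fill.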

Through holographic projection technology, highly intelligent virtual beings can be projected into our real world, allowing for authentic interactions.

Achieving this requires AI to possess intricate self-learning and emotional comprehension capabilities, enabling accurate understanding of the meaning, emotions, and intentions behind human language.

Projected in this way, highly intelligent virtual beings can appear strikingly realistic within our surroundings. This makes interactions feel more real, allowing individuals to engage in face-to-face conversations, collaborations, and discussions with virtual beings in the real world. Virtual beings cease to be purely digital entities and instead become palpable elements of our field of vision and the real environment.

The captivating aspects of real human personalities are evident in our speech and behavior. For virtual beings, these traits must be cultivated through data-driven AI model training. This is especially true for virtual anchors that play specific roles and therefore require specialized linguistic skills. Models trained on targeted data are thus essential to guide virtual beings’ speech and behavior.

Gathering such data is particularly challenging due to the complexity of environments and scenarios. Professional data companies are needed for the collection, cleansing, and labeling processes.
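
As a rough sketch of what the cleansing and labeling steps involve, the example below normalizes a handful of collected utterances, drops empty and duplicate entries, and attaches a label to each. The function names and the punctuation-based labeling rule are assumptions for illustration; in practice labeling is usually done by trained annotators or dedicated tools.

```python
def cleanse(utterances):
    """Normalize whitespace, then drop empty and duplicate utterances."""
    seen = set()
    cleaned = []
    for raw in utterances:
        text = " ".join(raw.split())  # collapse runs of whitespace
        key = text.lower()            # case-insensitive duplicate check
        if text and key not in seen:
            seen.add(key)
            cleaned.append(text)
    return cleaned


def label(text):
    """Toy labeling rule standing in for human annotation."""
    intent = "question" if text.endswith("?") else "statement"
    return {"text": text, "intent": intent}


# Made-up sample utterances, standing in for collected data.
raw = ["  How much is it? ", "how much is it?", "", "Welcome  to the stream"]
dataset = [label(t) for t in cleanse(raw)]
```

Here `dataset` ends up with two labeled records, since the empty string and the case-variant duplicate are removed during cleansing.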

Training AI models on data tailored to each anchor is where the soul of a virtual anchor lies.

King-IM-071 Facial Expression Image Corpus

King-TTS-290 British English Female Voice Synthesis Corpus for Live Broadcasting

King-TTS-291 British English Male Voice Synthesis Corpus for Live Streaming

King-TTS-292 British English Female Voice Synthesis Corpus for Advertising and Marketing

King-TTS-293 British English Female Voice Synthesis Corpus for Training Courses

King-TTS-294 British English Male Voice Synthesis Corpus for Training Courses

King-TTS-295 British English Male Voice Synthesis Corpus for Customer Service

King-TTS-296 British English Male Voice Synthesis Corpus

King-TTS-297 British English Male Voice Synthesis Corpus

References

[1] https://pdf.dfcfw.com/pdf/H3_AP202210141579159128_1.pdf?1665767294000.pdf

[2] https://www.cnshuziren.com/html/249.html